Senior Lecturer

Robert Stepnoski is a Senior Lecturer at the University of Texas at Austin School of Architecture, where he has taught since 2009.

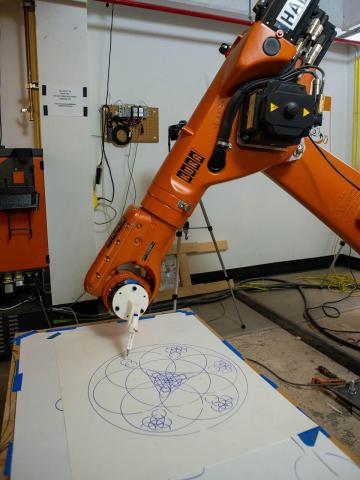

Stepnoski’s current research focuses on robotic automation in architectural design and fabrication using KUKA robots. His work integrates industrial robotic systems with modeling and visual programming tools such as Rhino3D, Grasshopper, and the KUKA|prc plugin to develop advanced workflows for architectural applications. Stepnoski has authored a growing curriculum of technical lessons and hands-on assignments covering topics including safety protocols, coordinate system setup, KRL (KUKA Robot Language) programming, SmartPAD operation, and parametric motion planning. Emphasizing both physical and digital robotic literacy, his teaching explores applications such as LED light drawing, 2D/3D stippling, pen drawing, foam cutting with hot wire tools, and sand carving with custom end effectors. Through an architectural lens, Stepnoski’s courses aim to bridge industrial robotics and design experimentation, training the next generation of architects to use automation in concept development, prototyping, and full-scale fabrication. His pedagogy combines rigorous safety practices with creative exploration, positioning robots not just as tools of automation but as instruments of spatial and material investigation.

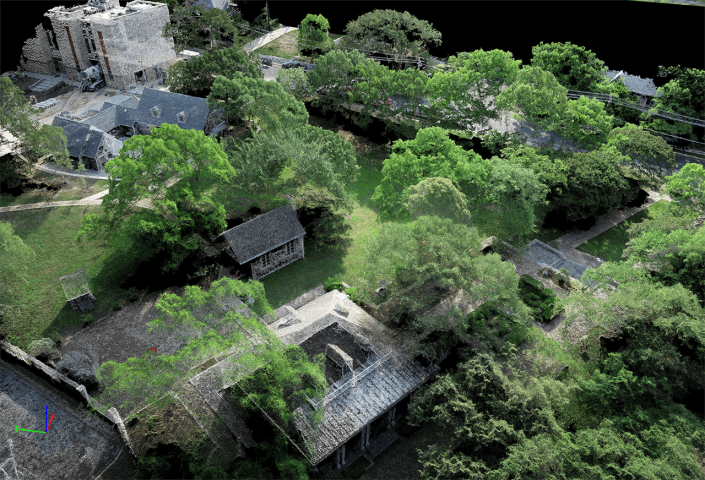

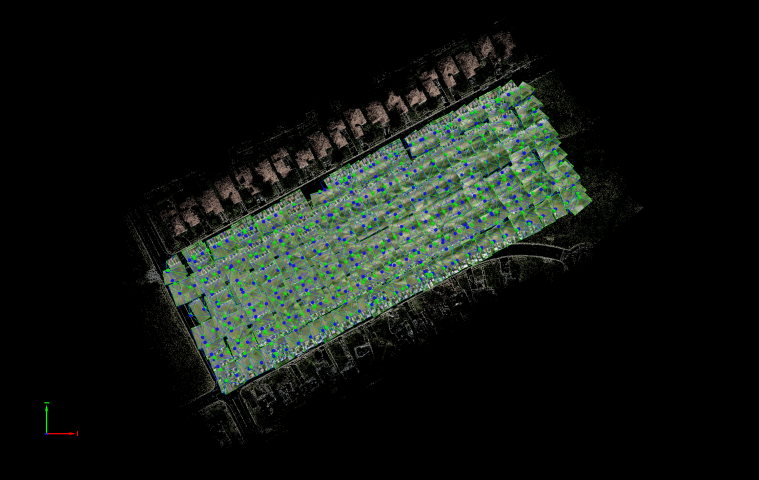

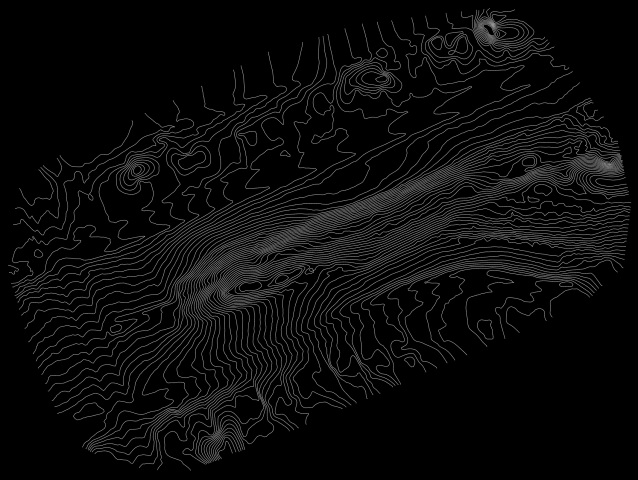

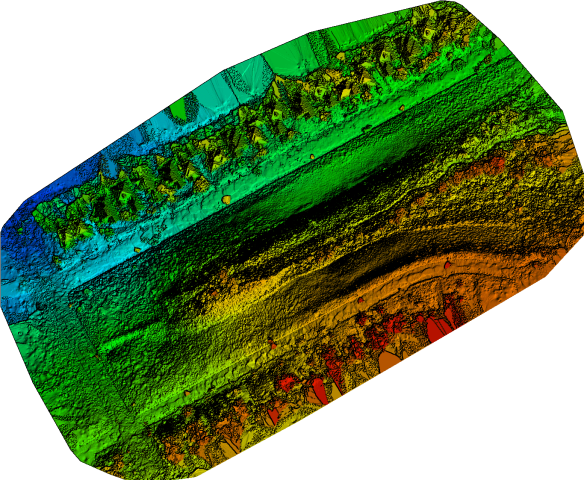

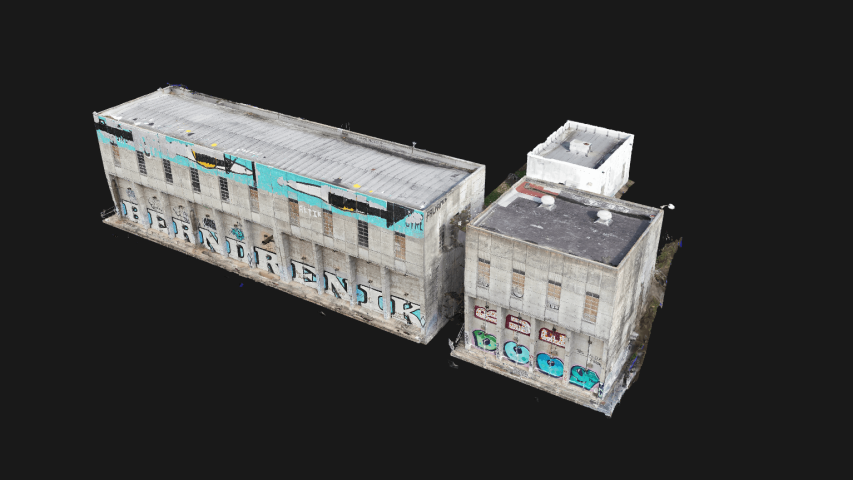

Building on the integration of KUKA industrial robots into architectural design workflows, Stepnoski’s research also extends into the use of unmanned aerial vehicles (UAVs) for mapping, modeling, and site analysis. Where robots like the KUKA KR60 enable precise material manipulation and fabrication at close range, drones provide a complementary large-scale spatial capability, capturing environmental and built data from aerial and terrestrial perspectives. Using photogrammetry and LiDAR scanning, UAVs generate high-resolution 3D point clouds that serve as the foundation for digital twins, topographic mapping, and as-built documentation. These datasets can be processed and visualized in Rhino3D, Pix4D, and other 3D modeling platforms, forming a feedback loop between scanned reality and robotic intervention. The synergy between robotic fabrication and drone-based site modeling opens new possibilities in automated construction, context-aware design, and adaptive fabrication strategies, aligning digital tools with both material production and spatial understanding.

Stepnoski’s recent research explores Low-Rank Adaptation (LoRA), a technique for efficiently fine-tuning large language or vision models by adding small trainable low-rank matrices to the frozen weights of a pre-trained neural network. This work is supported by the development of a custom GPU array, designed to optimize parallelized training workflows while managing the memory constraints typical of LoRA-based fine-tuning. By utilizing multi-GPU configurations, the system enables fast iteration across various LoRA configurations and model checkpoints, which is particularly useful in architectural and robotics applications where domain-specific data is scarce but model precision is critical. The GPU array is tuned to handle dynamic batch sizes, mixed-precision inference, and modular model deployment, providing a flexible environment for applying LoRA techniques to robotic vision, gesture recognition, and spatial understanding in built environments.

EDUCATION

- Boston Architectural Center; College of Architecture

- College of Technology at Alfred; State University of New York

PUBLICATIONS + PROJECTS

PUBLICATIONS

Book Chapter: "A Point Cloud Pedagogy", AMPS Book Publication. Kent School of Architecture and Planning. Canterbury, UK. 2023. Publication.

“Scan, Immerse & Experience: VR Enabled Field Study.” Journal of Digital Landscape Architecture, 7-2022. Wichmann Verlag, VDE VERLAG GMBH · Berlin · Offenbach. 2020

“Digital Landscape Architecture's Extended Reality.” Platform (Fall 2022), "Teaching for Next", School of Architecture, University of Texas at Austin.

Paper: "A Point Cloud Pedagogy", Accepted for AMPS Book Publication. Kent School of Architecture and Planning. Canterbury, UK. Spring/Summer 2022. Publication. Video Submission: A Point Cloud Pedagogy

PROJECTS

Arthur P. Watson House: LiDAR Scan, VR Model/Walkthrough, Documentation, UAV Flight, Aerial/Terrestrial Photogrammetry, 3D Point Cloud Model

Zilker Clubhouse: LiDAR Scan, VR Model/Walkthrough, Documentation, UAV Flight, Aerial/Terrestrial Photogrammetry, 3D Point Cloud Model

Elisabet Ney Museum: LiDAR Scan, VR Model/Walkthrough, Documentation, UAV Flight, Aerial/Terrestrial Photogrammetry, 3D Point Cloud Model

City of Austin; Combined Transportation, Emergency & Communications Center: LiDAR Scan, 3D Point Cloud Model. Documentation.

VR American A-Frame Exhibition: VR Model & Documentation.

Camp Round Meadow: LiDAR Scan, 3D Point Cloud Model. Documentation.

VR Large City Architecture Research Exhibition: VR Model & Documentation.

French Legation State Historic Site: LiDAR Scan, Aerial & Terrestrial Photogrammetry. Documentation.

LBJ Fountain & Field: LiDAR Scan, Aerial & Terrestrial Photogrammetry. Documentation.

Pennybacker Bridge: UAV Flight. Aerial Photogrammetry. Documentation.

UT East Mall: LiDAR Scan, 3D Point Cloud Model. Documentation.

UTSOA Battle Hall: LiDAR Scan, 3D Point Cloud Model. Documentation.

Coconut Club & Neon Grotto: VR Walkthrough. 3D Point Cloud Model. Documentation.

Oilcan Harry's: VR Walkthrough. 3D Point Cloud Model. Documentation.

Courtyard: Scan, Teach, Immerse. 3D Point Cloud Model, Unity3D Immersive Model.

Living Wall: 3D Point Cloud Model, Interactive VR. Documentation.

2021 Antheneum: 3D Point Cloud Model, Interactive VR. Documentation. Proof of Concept.

Architectural Library, Reading Room: 3D Point Cloud Model, Interactive VR. Documentation.

El Paso Studio: 3D Point Cloud Model, Unity3D Immersive Model.